Quick version? Watch the video. For full context, read the article.

Tuesday afternoon, 3:47 PM. The export works. Sort of. You pulled a list from the system, “quickly cleaned it up” in Excel, imported it back, then assembled a Word report with copy/paste and a few manual tweaks. It looks fine—until someone asks:

- “Which version of the numbers is this?”

- “Why is customer X listed twice?”

- “Who changed that line?”

- “Can we do this next month exactly the same, but with the new requirements?”

That’s the moment you realize: this isn’t an incident. This is a pipeline that relies on manual work. Patching one step fixes today’s symptom, but it keeps the underlying cause alive: fragmented truth, disconnected steps, and output that’s only checked at the very end.

An “integrated solution” sounds big. In practice, it’s a way to make things smaller: one source of truth, one process, predictable output.

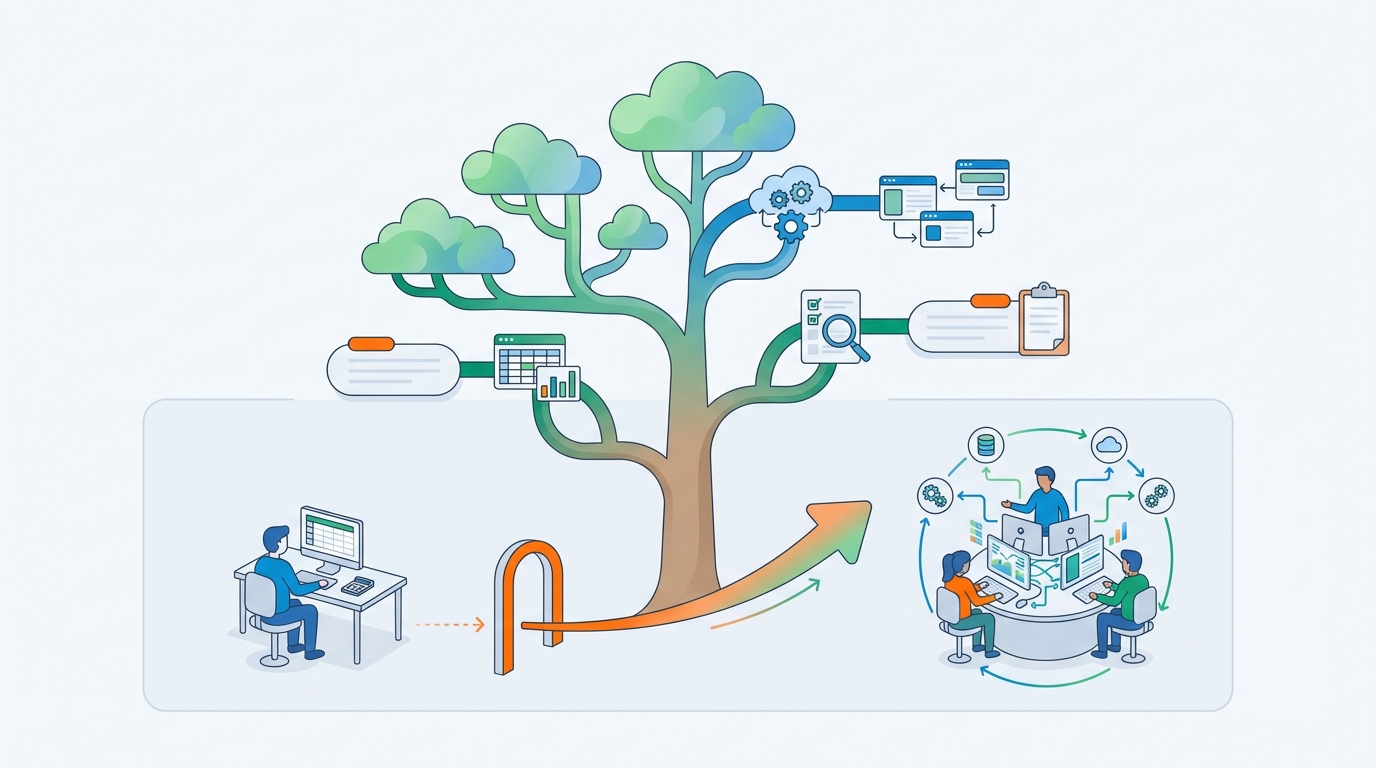

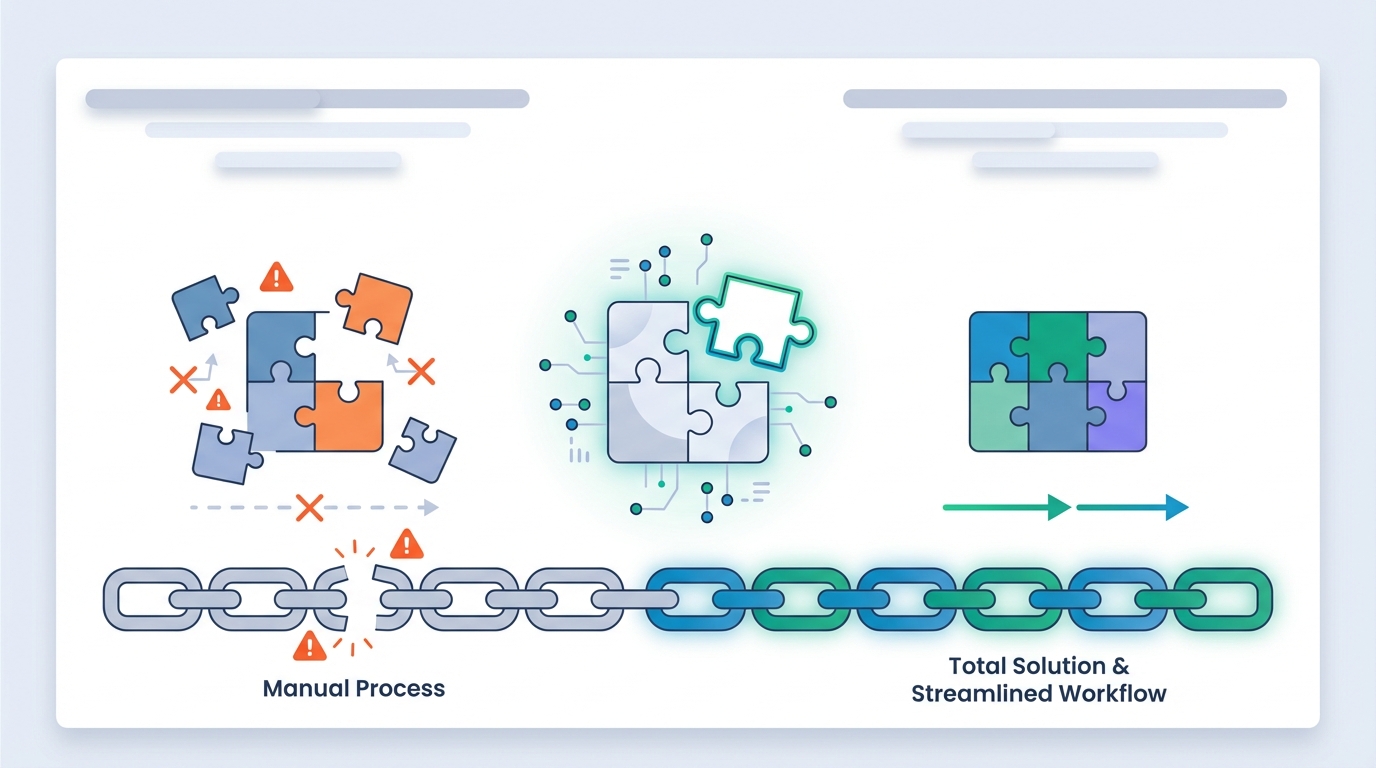

The pattern I keep seeing

Almost every organization starts pragmatically:

- Excel is “quick and handy”.

- Then an off-the-shelf system arrives.

- Then come extra spreadsheets, exports, scripts, and manual checks.

- Eventually, someone becomes “human middleware”.

It works, until you grow.

Growth means:

- more people changing data at the same time

- more exceptions (that suddenly become the norm)

- more demands on output (reporting, audits, accessibility, archiving)

- less patience for “we’ll fix it manually”

At that point, it’s not one problem. It’s system behavior.

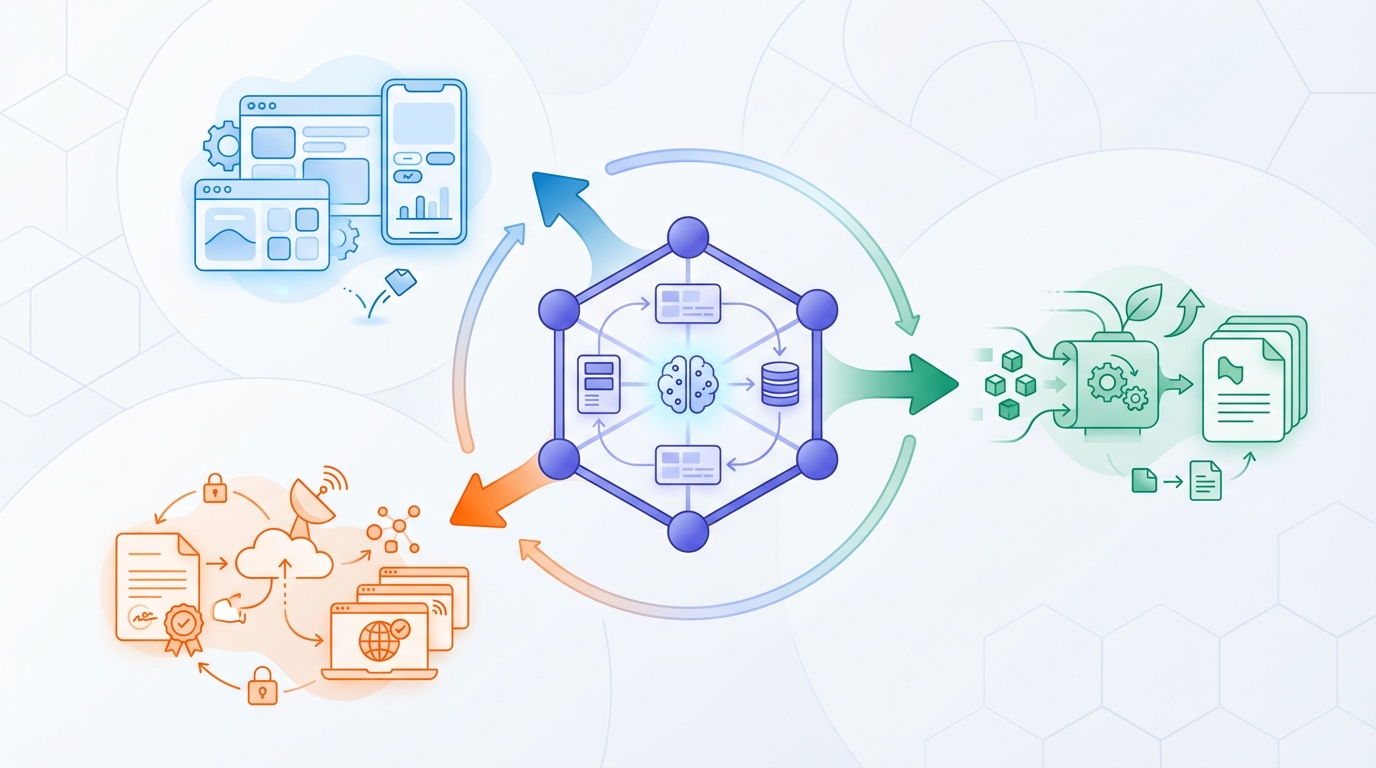

What I mean by “integrated solution”

Not a mega-suite. Not “replace everything because we can”.

Instead: a coherent design where four parts reinforce each other:

- A solid data model (one source of truth)

- A tailored application (your process as the interface)

- Document generation (output without copy/paste)

- Validated publishing (quality gates, no surprises)

That combination is what makes the pipeline stable.

1) Data model first: a source of truth you can trust

A database isn’t the goal. The goal is consistency.

If data lives in multiple places (Excel, an app, a SharePoint list, email threads, “Peter’s planning sheet”), you can automate all you want—but you’re automating chaos.

A strong data model gives you:

- shared definitions (“what exactly is a ‘final’ order?”)

- correct relationships (customer ↔ contract ↔ delivery ↔ invoice)

- enforceable rules (not “tribal knowledge”)

- an audit trail (who changed what, when)

Once truth lives in one place, reliable automation becomes possible.
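To make those four properties concrete, here is a minimal sketch in Python. It assumes a trimmed-down chain (customer ↔ contract ↔ order, leaving out delivery and invoice), and every name in it (`Customer`, `Contract`, `Order`, `Store`, `OrderStatus`, the positive-amount rule) is a hypothetical illustration, not a prescribed schema. The enum pins down what “final” means, the object references encode the relationships, `finalize` enforces a rule in code, every status change leaves an audit entry, and `Store` refuses to hold the same customer twice.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Dict, List


class OrderStatus(Enum):
    # One shared definition of "final", instead of a convention per spreadsheet.
    DRAFT = "draft"
    FINAL = "final"


@dataclass
class Customer:
    customer_id: str
    name: str


@dataclass
class Contract:
    contract_id: str
    customer: Customer  # relationship: each contract belongs to exactly one customer


@dataclass
class AuditEntry:
    who: str
    what: str
    when: datetime


@dataclass
class Order:
    order_id: str
    contract: Contract  # relationship: each order is placed under one contract
    amount: float
    status: OrderStatus = OrderStatus.DRAFT
    history: List[AuditEntry] = field(default_factory=list)

    def finalize(self, user: str) -> None:
        # Enforceable rule (illustrative): only a positive amount can become final.
        if self.amount <= 0:
            raise ValueError("A final order must have a positive amount")
        self.status = OrderStatus.FINAL
        # Audit trail: record who changed what, and when.
        self.history.append(
            AuditEntry(who=user, what="status -> final", when=datetime.now(timezone.utc))
        )


class Store:
    """The single place where each customer exists exactly once."""

    def __init__(self) -> None:
        self.customers: Dict[str, Customer] = {}

    def add_customer(self, customer: Customer) -> None:
        if customer.customer_id in self.customers:
            raise ValueError(f"Duplicate customer: {customer.customer_id}")
        self.customers[customer.customer_id] = customer


# Usage
store = Store()
acme = Customer("C1", "Acme")
store.add_customer(acme)
order = Order("O1", Contract("K1", acme), amount=1200.0)
order.finalize(user="peter")
```

In a real system the same rules would typically also live in the database (unique keys, foreign keys, check constraints), so they hold no matter which tool writes the data.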

2) A tailored app: your process, without detours

Off-the-shelf tools are great… until your workflow is just different enough.

Then you get:

- custom fields sprinkled everywhere

- workarounds (“do step 3 in Excel”)

- a process bent around the tool instead of supported by it

At some point, custom software stops being a luxury and becomes a strategic move: your workflow is part of your value, so it should be encoded in your system.

The advantage of a tailored app on top of a clean model:

- fewer steps, fewer clicks, fewer mistakes

- roles and permissions that match how you actually work

- changes are easier, because you’re not fighting a generic template
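One way to encode a workflow, rather than bend it around a generic tool, is an explicit table of allowed steps and the roles that may take them. The sketch below assumes a hypothetical intake → review → approved/rejected process with “clerk” and “manager” roles; none of these names come from a specific product. Steps that aren’t in the table simply don’t exist, so there is no “do step 3 in Excel” detour.

```python
from typing import Dict, FrozenSet, Tuple

# Hypothetical workflow: the states, roles, and steps are illustrative only.
# Each allowed step maps to the roles that may perform it.
TRANSITIONS: Dict[Tuple[str, str], FrozenSet[str]] = {
    ("intake", "review"): frozenset({"clerk", "manager"}),
    ("review", "approved"): frozenset({"manager"}),
    ("review", "rejected"): frozenset({"manager"}),
}


class Case:
    def __init__(self, case_id: str) -> None:
        self.case_id = case_id
        self.state = "intake"

    def move_to(self, new_state: str, role: str) -> None:
        allowed_roles = TRANSITIONS.get((self.state, new_state))
        if allowed_roles is None:
            # Steps that are not part of the process are rejected outright.
            raise ValueError(f"No step from {self.state!r} to {new_state!r}")
        if role not in allowed_roles:
            raise PermissionError(
                f"Role {role!r} may not perform {self.state!r} -> {new_state!r}"
            )
        self.state = new_state


# Usage
case = Case("2024-001")
case.move_to("review", role="clerk")
case.move_to("approved", role="manager")
```

Changing the process then means changing one table, which is what makes a tailored app easier to adapt than a template you have to fight.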

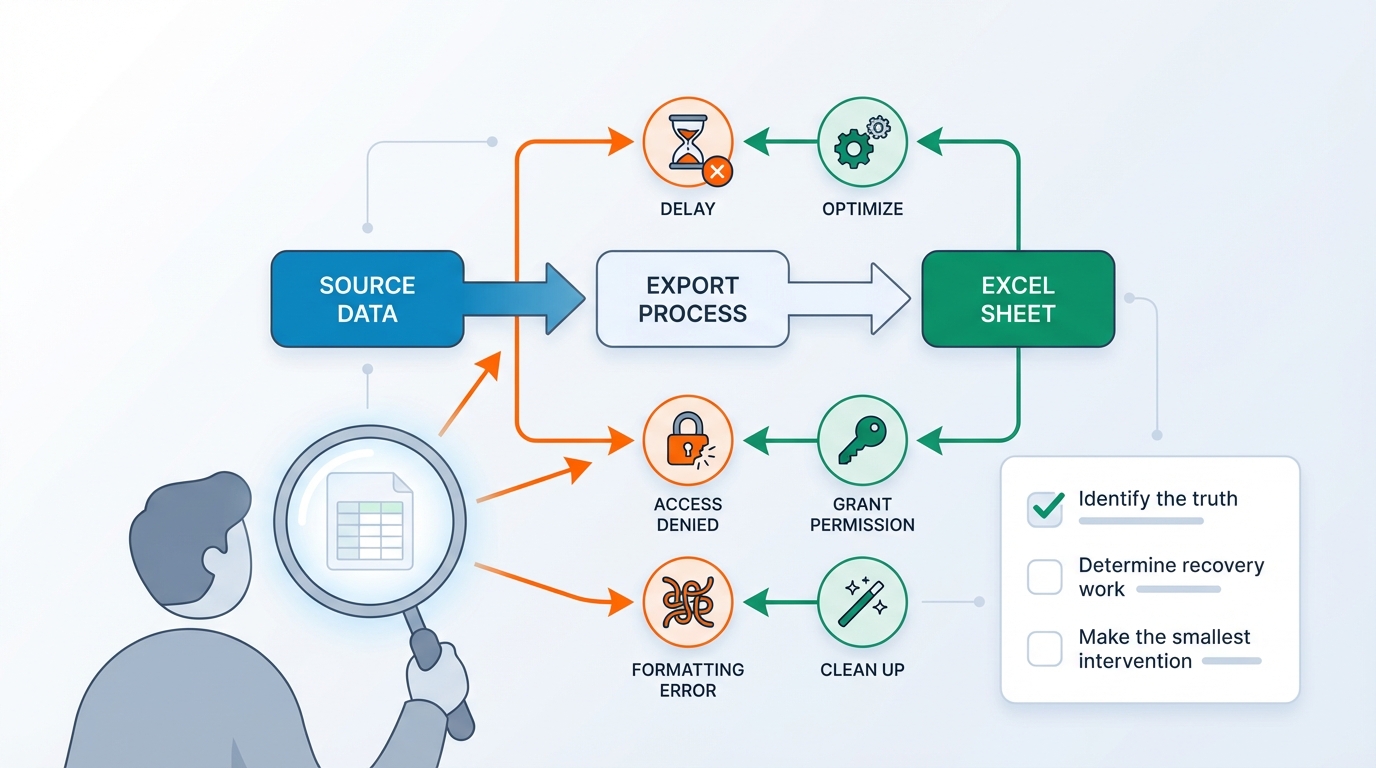

3) Document generation: where issues become visible

Documents are often where pain concentrates:

- reports

- decisions

- proposals

- legal documents

- exports to partners

And that’s where manual reality shows up:

- numbering “almost” correct

- tables shift

- exceptions get missed

- someone edits “just this one thing” in Word

When documents are generated from the same source of truth (the model), output becomes:

- repeatable

- version-controlled (templates)

- testable

- and not dependent on copy/paste

It may feel like “one more step”, but it eliminates a lot of repair work.
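As a minimal sketch of “generated, not assembled”, the snippet below renders a plain-text report from records using Python’s standard `string.Template`. The template text, the `TEMPLATE_VERSION` label, and the record fields are hypothetical; a real setup would more likely fill a Word, HTML, or PDF template. The point it shows: numbering and totals are computed rather than typed, and the same input always yields the same output, so a unit test can pin it down.

```python
from string import Template
from typing import Dict, List

# Hypothetical template; in practice it would live in version control next to the code.
TEMPLATE_VERSION = "report-v3"
REPORT = Template(
    "Report for $period (template $version)\n"
    "$rows\n"
    "Total: $total\n"
)


def render_report(period: str, lines: List[Dict]) -> str:
    # Numbering is computed, never typed by hand, so it cannot be "almost" correct.
    rows = "\n".join(
        f"{i}. {line['customer']}: {line['amount']:.2f}"
        for i, line in enumerate(lines, start=1)
    )
    total = sum(line["amount"] for line in lines)
    return REPORT.substitute(
        period=period, version=TEMPLATE_VERSION, rows=rows, total=f"{total:.2f}"
    )


# Usage
data = [{"customer": "Acme", "amount": 100.0}, {"customer": "Beta", "amount": 50.5}]
print(render_report("2024-05", data))
```

Nobody edits the result by hand; if the output needs to change, the template or the data changes, and both are reviewable.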

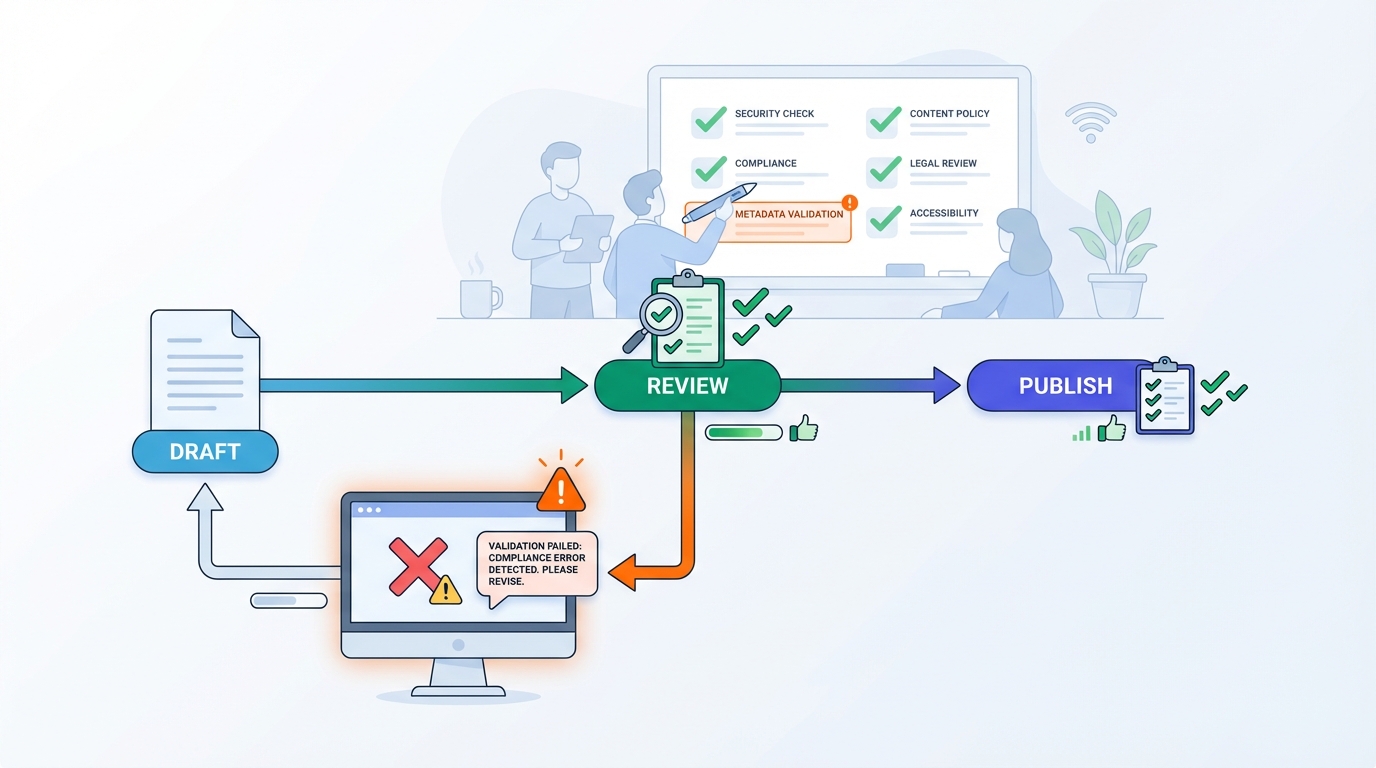

4) Validated publishing: compliance as a quality gate

The biggest stress happens when compliance is only checked at the end.

That’s when you hear:

- “The document is done, but PDF/UA fails.”

- “The metadata isn’t correct.”

- “The validator turned red after a small change.”

The integrated approach flips this: publishing requirements become part of the pipeline.

So you get:

- builds that validate by default

- a pipeline that surfaces problems early

- output that is predictably compliant (e.g., WCAG / PDF/A / PDF/UA), because you continuously enforce it

Compliance stops being a project phase and becomes a system property.
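The shape of such a gate can be sketched in a few lines. This is explicitly not a PDF/UA validator: `DocumentMeta` and its three fields are a hypothetical, heavily simplified stand-in, and real conformance checking should be delegated to a dedicated validator (veraPDF, for example, checks PDF/A and PDF/UA). What the sketch shows is the mechanism: the check runs on every build and stops it with a readable list of problems, instead of turning red after the document is already “done”.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DocumentMeta:
    # Hypothetical, heavily simplified metadata; not a real PDF/UA data structure.
    title: Optional[str]
    language: Optional[str]
    tagged: bool


def check_publishing_rules(meta: DocumentMeta) -> List[str]:
    """Return every problem found; an empty list means the gate passes."""
    problems = []
    if not meta.title:
        problems.append("missing document title")
    if not meta.language:
        problems.append("missing document language")
    if not meta.tagged:
        problems.append("document is not tagged (PDF/UA requires a tagged PDF)")
    return problems


def publish_gate(meta: DocumentMeta) -> None:
    problems = check_publishing_rules(meta)
    if problems:
        # Stop the build here instead of discovering the issue at the very end.
        raise SystemExit("Publishing gate failed: " + "; ".join(problems))


# Usage: runs on every build, not only before the deadline
publish_gate(DocumentMeta(title="Q2 report", language="en", tagged=True))
```

Raising `SystemExit` makes the process exit with a non-zero status, which most CI systems treat as a failed build.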

Why this is often faster than fixing things one by one

The irony: “integrated solution” sounds larger, but it prevents endless repetition.

Piecemeal fixes have hidden costs:

- every export is a new chance for mismatch

- every manual step is a new failure point

- every exception requires training and re-training

- every change in one step breaks the next

In a coherent pipeline, you can move faster because:

- definitions live centrally (the model)

- UI and logic follow the same truth

- documents come from the same source

- publishing requirements run as gates

Then change becomes normal—not a risky event.

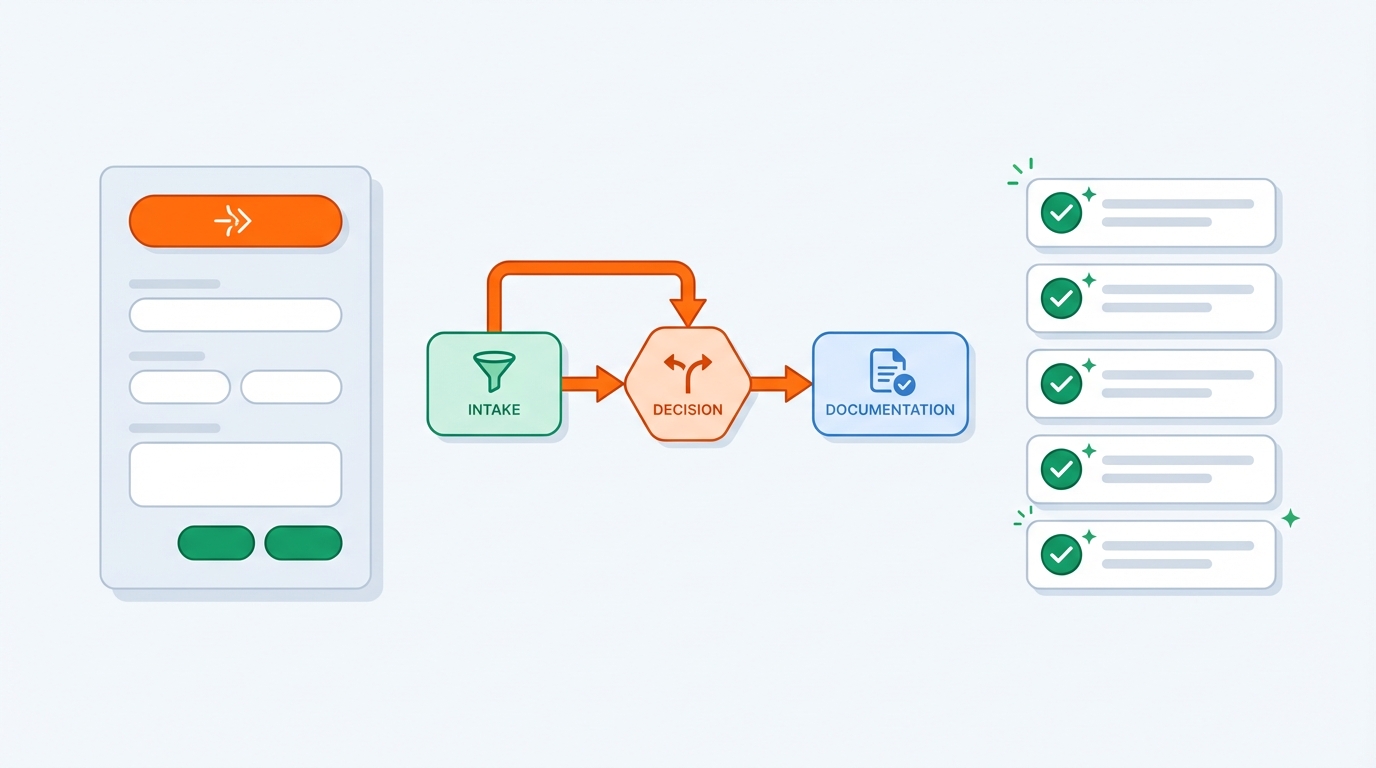

How to start small (without a big bang)

An integrated solution doesn’t mean all-or-nothing. In fact, it shouldn’t.

A practical start:

- Pick one flow where the pain is highest (often: intake → decision → document)

- Create one source of truth for that flow (model + rules)

- Build a thin UI to support that flow

- Generate one document type that causes the most manual work today

- Add one quality gate (a validator that always runs)

Then expand—not by adding “features”, but by extending the pipeline.

Each step delivers immediate value while keeping the long-term direction.
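Here is what that first slice might look like end to end, as a minimal sketch: one flow (intake → decision → document), one rule, one document, and one gate that always runs. The field names and the `APPROVAL_LIMIT` value are assumptions made up for the illustration; in a real system each function would grow, but the chain would stay the same.

```python
# Hypothetical first flow: intake -> decision -> document -> quality gate.
# Field names and the approval limit are illustrative assumptions.
APPROVAL_LIMIT = 10_000.0


def intake(raw: dict) -> dict:
    # Clean input once, at the boundary, so later steps only see valid data.
    applicant = raw["applicant"].strip()
    if not applicant:
        raise ValueError("applicant is required")
    return {"applicant": applicant, "amount": float(raw["amount"])}


def decide(request: dict) -> dict:
    # The rule lives in code, not in someone's head.
    decision = "approved" if request["amount"] <= APPROVAL_LIMIT else "rejected"
    return {**request, "decision": decision}


def generate_document(case: dict) -> str:
    return f"Decision for {case['applicant']}: {case['decision']} ({case['amount']:.2f})"


def quality_gate(document: str, case: dict) -> str:
    # One check that always runs; add more checks here as the pipeline grows.
    if case["applicant"] not in document or case["decision"] not in document:
        raise ValueError("document failed the quality gate")
    return document


def run_pipeline(raw: dict) -> str:
    case = decide(intake(raw))
    return quality_gate(generate_document(case), case)


print(run_pipeline({"applicant": " Acme ", "amount": "2500"}))
# prints: Decision for Acme: approved (2500.00)
```

Extending the pipeline then means adding a step or a check to this chain, not bolting another spreadsheet onto the side.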

What this delivers in practice

People expect “faster delivery” as the benefit. True—but the bigger win is:

- less manual work

- fewer correction loops

- less dependency on specific individuals

- more calm inside the team

- a pipeline that can evolve with growth and new requirements

In short: you replace human glue with a system that enforces quality.

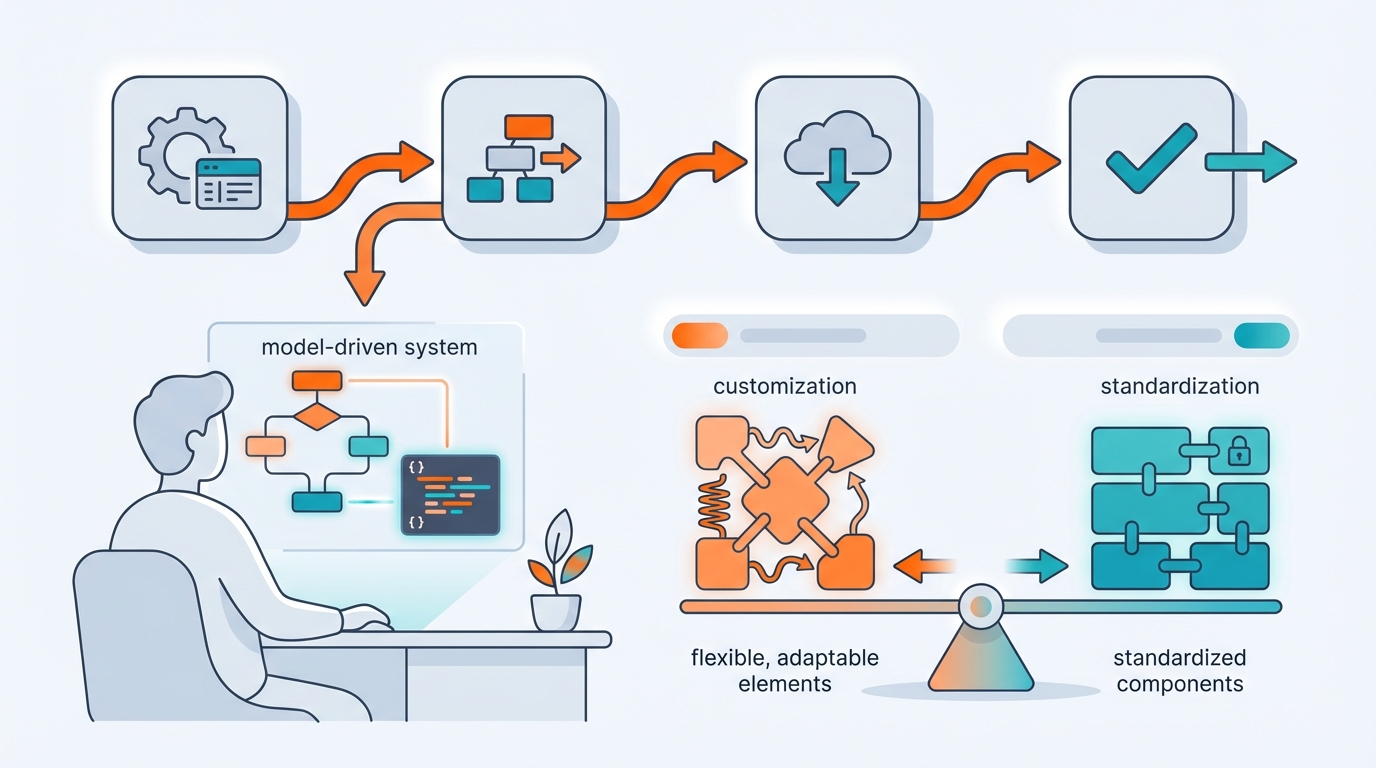

Why this matches Elk Solutions

I build systems that don’t just run; they are also explainable and maintainable.

That’s what an integrated solution is: not a pile of tools, but a pipeline that holds together.

And because I work in a model-driven way (and can automate much of the repetitive work), it stays practical: fast iterations where things are generic, deliberate engineering where it matters.

Want to sanity-check whether this fits your situation?

If your process currently relies on exports, Excel, manual checks, and “Peter knows how it works”, this conversation is often valuable by itself:

- where does your truth really live today?

- which step creates the most rework?

- what’s the smallest change that immediately reduces stress?

If you want, send me a short description of your flow (even bullet points). I’ll suggest a sensible first step.